Survey Results Pt. 4: Philosophy

In my previous three posts I revealed the results to sections 1 - 4 of my Manifold survey. We had a lot of surprises, like the Democrat:Republican ratio being even bigger than expected, Destiny getting second-to-last in the favorite YouTuber competition, and Manifold, while not being just a bunch of white guys, still having a more skewed gender ratio than Pat’s survey suggested.

This post will discuss the results of Section 5, which asked for Manifolders’ opinions on a variety of philosophical questions. Many of these were also asked on the PhilPapers Survey, so we can compare Manifolders’ answers to those of professional philosophers.

Free Will

The first question asked, “Which of the following options best describes your position on free will?” The options were:

Compatibilism: Free will and determinism are compatible, i.e., it is metaphysically possible for free will to exist in a deterministic universe.

Compatibilism, but I still think free will doesn't exist for some other reason.

Hard determinism: Free will is metaphysically possible, but not compatible with determinism, and either determinism is true, or it's close enough to being true to rule out the existence of free will.

Libertarianism (not to be confused with the political ideology of the same name): Free will is incompatible with determinism, but it exists anyway. Therefore, determinism is false, and freely willed decisions are not determined by prior conditions.

Impossibilism: Free will is not metaphysically possible.

Incompatibilist and believe that free will doesn't exist, but my reasoning isn't related to determinism or to free will being metaphysically impossible.

There is no fact of the matter on whether free will exists and/or the concept is meaningless.

Other

Not sure

Here are the responses from the survey:

In total, that’s 43 responses from compatibilists who believe in free will, 2 compatibilists who don’t believe in free will, 22 hard determinists, 7 libertarians, 17 impossibilists, 2 incompatibilists who reject free will for other reasons, 43 people who don’t believe free will is a meaningful concept or think there is no fact as to whether it exists, 6 people who selected “Other”, and 17 who aren’t sure. In total, that gives us 50 people who believe in free will and 43 who don’t. I’m not counting the people who think there’s no fact of the matter, or the “Other”s and “Not sure”s, towards either group.

So, out of the people who have a stated opinion and believe free will is a meaningful concept, about 54% believe in free will, and 48% are some version of compatibilist. But if “there is no fact of the matter” responses are counted, neither side has a majority: Then we have 37% free will believers, and 32% each of non-free-will-believers and people who think there’s no fact of the matter. 33% of these respondents are some type of compatibilist, and 35% are incompatibilists. In other words, Manifolders are pretty closely divided on this issue.
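For anyone who wants to check the arithmetic, here is a minimal sketch that recomputes the percentages above from the raw counts. The dictionary keys and the `pct` helper are just names I made up for illustration; only the counts come from the survey.

```python
# Raw counts from the free will question ("Other" and "Not sure" left out).
counts = {
    "compatibilist_believer": 43,
    "compatibilist_nonbeliever": 2,
    "hard_determinist": 22,
    "libertarian": 7,
    "impossibilist": 17,
    "incompatibilist_other": 2,
    "no_fact_of_the_matter": 43,
}

believers = counts["compatibilist_believer"] + counts["libertarian"]
non_believers = (
    counts["compatibilist_nonbeliever"]
    + counts["hard_determinist"]
    + counts["impossibilist"]
    + counts["incompatibilist_other"]
)
compatibilists = counts["compatibilist_believer"] + counts["compatibilist_nonbeliever"]
incompatibilists = (
    counts["hard_determinist"]
    + counts["libertarian"]
    + counts["impossibilist"]
    + counts["incompatibilist_other"]
)

# Two different denominators, matching the two ways of counting in the text.
meaningful = believers + non_believers                   # excludes "no fact of the matter"
with_no_fact = meaningful + counts["no_fact_of_the_matter"]


def pct(part, whole):
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


print(pct(believers, meaningful), pct(compatibilists, meaningful))
print(pct(believers, with_no_fact), pct(non_believers, with_no_fact))
print(pct(compatibilists, with_no_fact), pct(incompatibilists, with_no_fact))
```

The first line of output uses only respondents who think free will is a meaningful concept; the other two lines add the “no fact of the matter” group to the denominator.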

This result is a minor upset: The market on the topic thought that most Manifolders would not believe in free will.

How does this compare to professional philosophers? When asked this question, the majority (not just a plurality) of philosophers endorsed compatibilism:

(Note: The values in parentheses are the percentages of people who exclusively endorsed an option, rather than selecting that option and another option)

Manifolders were also less likely than philosophers to endorse libertarianism (only 5% of people who didn’t answer “Other” or “Not sure”, and 8% of people who believed free will is a meaningful concept), though libertarianism is in the minority among philosophers as well.

Manifolders were a lot more likely than philosophers to accept “No free will”, but the most extreme difference was the number of philosophers who stated that the question was unclear or there is no fact of the matter (about 3.5% in total) vs. the number of Manifolders who chose the equivalent option on my survey. This is probably due to the influence of the rationalist movement, since a common belief in rationalist circles is that many philosophical debates are actually just debates over the definition of a word or something similar, and that they should be “dissolved”.

Although I just discussed the differences between Manifolders and philosophers, I would actually guess that, aside from the major discrepancy on whether “free will” has meaning, Manifolders’ beliefs are much closer to philosophers’ beliefs than the general public’s are (although I don’t have data on the general public’s beliefs). I would imagine that the majority position among the general public is libertarianism, despite this being difficult to defend philosophically, and that most others would be either hard determinists or impossibilists. In other words, I think that the position that the majority of philosophers hold is very uncommon among the general public, mainly because of the misleading way the debate is framed (it’s often portrayed as “free will vs. determinism” despite this being a false dichotomy). Manifolders had far fewer libertarians and a lot more compatibilists than I would estimate the general public has, which makes them closer to professional philosophers in their beliefs, but still not the same as them.

What happens after you die?

This one had options submitted by a free response market. The options that were included on the survey were:

Nothing

SCP-2718

You get reborn.

You go to heaven or hell.

You relive your life over and over again.

You are resurrected by a superintelligence/advanced technology years in the future.

You hear an alarm clock ring as you wake up.

Other

Unsurprisingly, “Nothing” got the most votes, with 108 respondents picking that option.

(Yes, this would be a surprising result on some sites, but Manifold is not very religious.)

The next most popular was “Other”, with 20 responses. But what I was most interested in seeing was the breakdown among people who actually do believe in one of the afterlives included in the options. The most popular view of the afterlife, aside from the view that there is none, was being resurrected by a superintelligence or advanced technology, with 9 people selecting that option. Once again, I credit the influence of the rationalist movement for this. After that were “You go to heaven or hell,” (8 responses), “You get reborn,” (4 responses), “You hear an alarm clock ring as you wake up,” (2 responses), and “SCP-2718” (1 response). No one answered that you relive your life over and over again.

Newcomb’s Problem

My survey asked about Newcomb’s Problem, described as follows:

You are presented with two boxes. One is transparent and contains $1000, and the other is opaque. You know that the opaque box contains either $1,000,000 or nothing, and you are given the choice to either take just the opaque box (one-boxing), or to take both boxes (two-boxing). However, there is a catch: Before you make your decision, an extremely advanced device will be used to scan your nervous system and predict what decision you will make, and the contents of the opaque box are determined by its prediction. This device is very reliable: It has been used on many people performing the same experiment before, and has never been wrong. If the device predicts that you are going to two-box, it will put nothing in the opaque box, but if it predicts that you will one-box, it will put $1,000,000 in it. Should you two box or one box?

This is a very contentious issue among philosophers. A slim plurality are two-boxers, but a lot are still undecided.

Manifolders, on the other hand, have a much more definitive favorite.

I am not surprised at all that one-boxing won - I have always thought it was the correct answer myself, and I know it’s the answer that people on rationalist sites like LessWrong seem to agree on (plus, the market gave it an 81% chance of winning) - but I was surprised by how overwhelming the margin was. Almost ten times as many people chose one-boxing as two-boxing. I was also surprised at how few people were undecided. I thought there would at least be a large chunk of people who had never heard of Newcomb’s Problem before and might not come to a definite conclusion just from reading the statement of the problem.

The exact numbers were 129 one-boxers, 13 two-boxers, and 11 undecideds. If we only count people who knew what they would choose, about 91% were one-boxers.

I guess if I ever have the opportunity to have either a random philosopher or a random Manifolder perform a Newcomb’s Problem on my behalf, I should be sure to choose the Manifolder (although even a two-boxer would agree to this).
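To make the one-boxer's reasoning concrete, here is a rough sketch of the expected payoffs if you treat the predictor's reliability as a probability. The `accuracy` parameter is my own assumption for illustration (the survey question only said the device has never been wrong), and conditioning on your own choice this way is exactly the step two-boxers object to, so this shows the one-boxer's calculation rather than settling the debate.

```python
from fractions import Fraction

SMALL = 1_000        # transparent box
BIG = 1_000_000      # opaque box, if the device predicted one-boxing


def expected_payoffs(accuracy):
    """Expected winnings for each choice, assuming the device predicts
    your actual choice with probability `accuracy` (a hypothetical parameter)."""
    one_box = accuracy * BIG                    # opaque box is full iff the prediction was right
    two_box = SMALL + (1 - accuracy) * BIG      # opaque box is full iff the prediction was wrong
    return one_box, two_box


# Accuracy at which the two expected payoffs are equal:
# accuracy * BIG == SMALL + (1 - accuracy) * BIG
break_even = Fraction(BIG + SMALL, 2 * BIG)

one_box, two_box = expected_payoffs(Fraction(99, 100))
print(one_box, two_box, break_even)
```

On this way of counting, one-boxing comes out ahead whenever the device is even slightly better than a coin flip, because the break-even accuracy sits just above 1/2.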

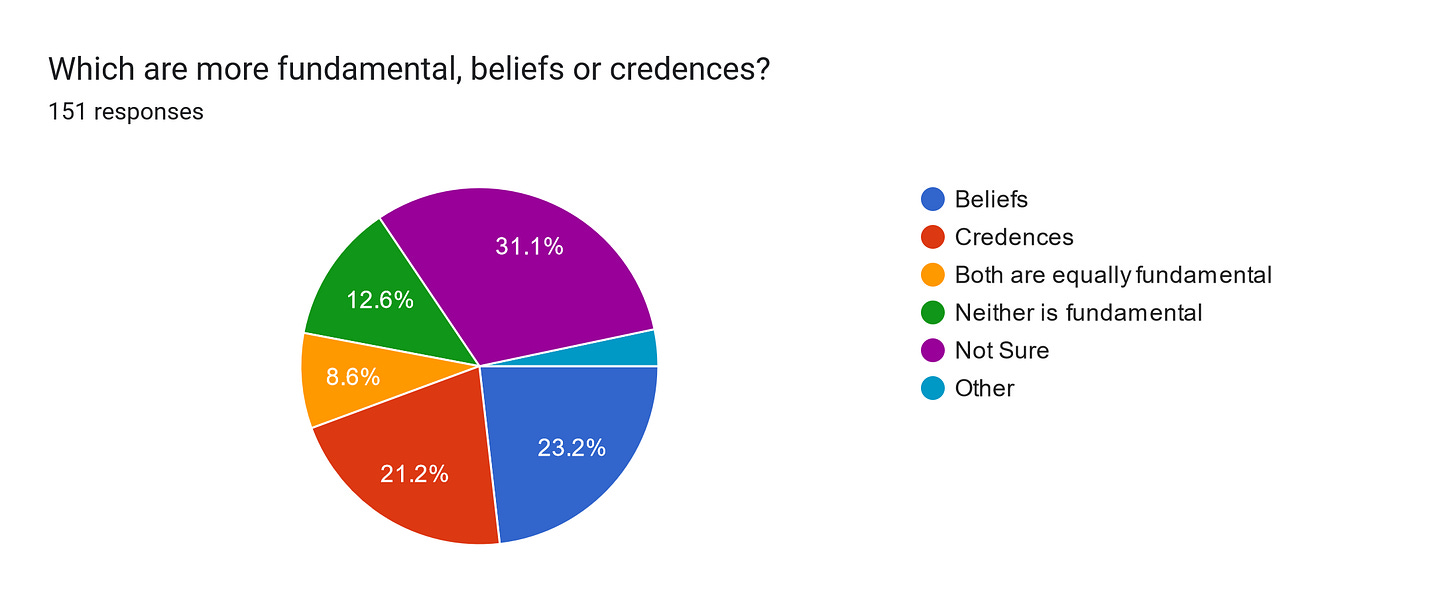

Which are more fundamental, beliefs or credences?

This one was actually inspired by the PhilPapers survey, where philosophers were extremely divided on the issue:

“Credence” technically had a slight plurality, but not a statistically significant one. So, did Manifolders have a clear choice? When asking this question on the survey, I included the following description:

A credence (sometimes called a degree of belief) represents how probable you consider a given claim, while a belief is whether you think a claim is true. Some philosophers or psychologists would say that beliefs are the more fundamental psychological concept, and credences just represent how confident we are in various beliefs or are derived from them in some way. Others would say that credences are more fundamental and that a belief is just a claim that we have a certain level of credence in.

Manifolders were just as divided on this as philosophers. The most common answer was “Not sure” (47 responses), but even out of those who had an opinion, there was an almost even divide between people who said beliefs are more fundamental, credences are more fundamental, and that both are on equal footing (either both fundamental or neither fundamental). 35 people went with beliefs, 32 for credences, 19 for neither, 13 for both, and 5 for “Other”.

Despite how close this one was, the market still managed to get it correct, and, appropriately for one that’s so close, it was not very confident.

I was interested to see how the rationalist leanings of Manifold would affect this question. On the one hand, the rationalist movement loves Bayesianism and believes that you should focus more on credences than binary beliefs. On the other hand, the question made it clear that it was asking about actual human psychology, not how rationalists believe we should think. Respondents seem to have understood that, given that they were just as divided as actual philosophers.

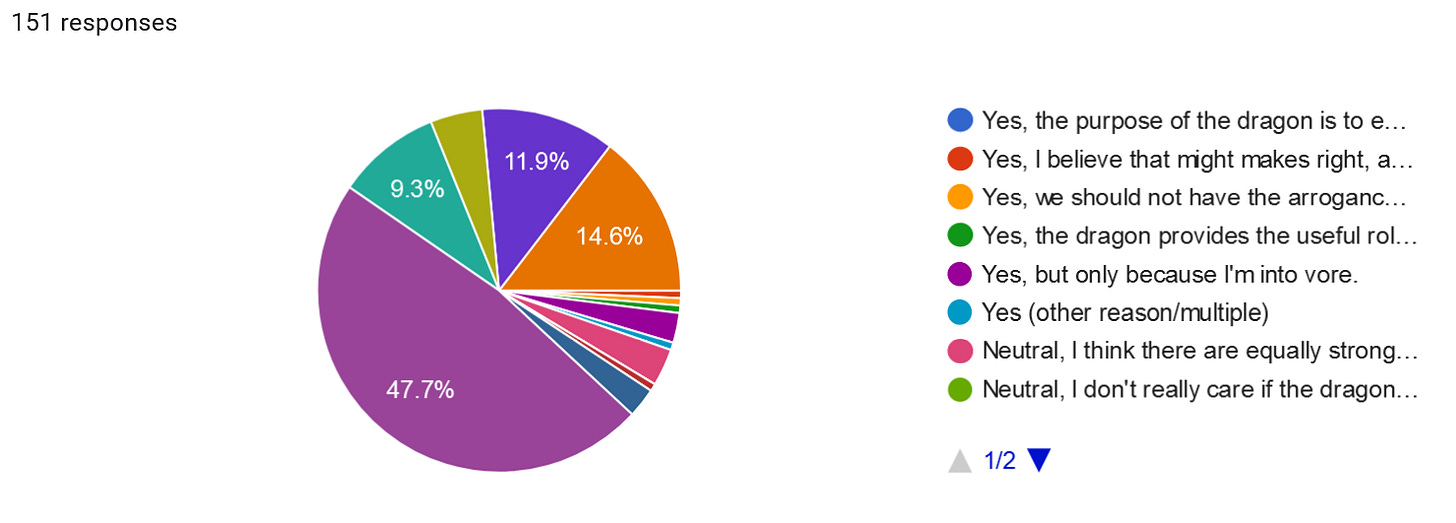

The Fable of the Dragon-Tyrant

This question was based on the Fable of the Dragon-Tyrant, a famous argument for life extension that uses the titular dragon as an analogy for aging. The question described the fable as follows:

Your planet is being terrorized by a giant dragon. Every day, the dragon demands that 10,000 people be given to it to consume. The dragon is so large and powerful that all previous attempts to defeat it have failed miserably, so much so that it has become accepted as a fact of life that the dragon simply cannot be vanquished. Because of this, philosophers have come up with all sorts of justifications for the dragon's existence to prevent people from despairing at their inevitable fate in its jaws. However, recent breakthroughs have found a way to pierce the dragon's scales, such that it may soon be possible to build a weapon capable of destroying the dragon once and for all. There is a great public debate surrounding this, with some arguing against this as an affront to nature. Do you take their side and support the tyrannical dragon?

I didn’t actually mention life extension in the question I asked, mainly because it was meant as more of a fun question than a serious one. The options were:

Yes, the purpose of the dragon is to eat humans, and our purpose is fulfilled most truly and nobly only in being eaten by it.

Yes, I believe that might makes right, and the dragon has the right to eat humans as he pleases.

Yes, we should not have the arrogance to try to change nature. Who knows what horrible things could result if we kill the dragon?

Yes, the dragon provides the useful role of preventing our species from becoming overpopulated. We need a dragon to eat us for the sake of the ecosystem.

Yes, but only because I'm into vore.

Yes (other reason/multiple)

Neutral, I think there are equally strong reasons for and against killing the dragon tyrant.

Neutral, I don't really care if the dragon eats me or anyone else.

Neutral, I am a fervent believer in the golden mean. Rather than considering these two extreme positions, I think that we should only kill half of the dragon while keeping the other half of his body alive.

Neutral (other reason/multiple)

No, the dragon kills millions of people each year, and we should do whatever we can to stop him, even if it is difficult and leads to some new issues that we will have to solve.

No, I don't want to get eaten by the dragon.

No, I want to save my loved ones from being eaten by the dragon.

No, imagine how cool it would be to kill the fucking dragon! Like, holy shit man, that would be so awesome!

No (other reason/multiple)

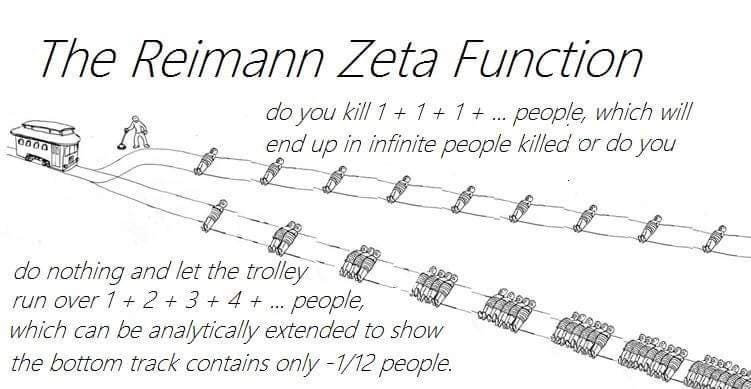

The first option there is inspired by a line from the original story. The third and fourth are meant to imitate the real-life arguments against life extension, although maybe I should have included an option of, “I’d get bored of living and want the dragon to eat me eventually,” since that’s another common one. And of course, I included some joke options, like the golden mean one, which was meant to mock bothsidesism. It was somewhat inspired by this installment of Manifold’s favorite webcomic:

The results are a bit hard to read off the graph, but here it is anyway:

The full results were:

No one answered the first option. I was surprised at first because I thought it was a funny line in the original story, but I think it just wasn’t as funny as the other joke options.

“Might makes right”, “Don’t change nature”, overpopulation, and “Yes (other/multiple)” got 1 vote each.

4 people chose “Yes, but only because I’m into vore.” In other words, the best way to get people to choose Yes is to have a joke option.

5 said that there are equally strong reasons for and against.

1 chose the golden mean.

4 chose “Neutral (other reason/multiple)”.

72 chose “No, because the dragon kills millions of people.” This is the big slice on the pie chart.

14 chose No out of self-interest (they don’t want to be eaten).

7 chose No to save their loved ones.

18 chose No because killing the dragon would be cool.

22 chose “No (other reason/multiple).”

In total, there were 8 Yes’s, 10 Neutral’s, and 133 No’s. In terms of percentages, this means that 5% of people supported the dragon-tyrant, about 7% were neutral, and 88% opposed him. No surprises there - the only thing that really surprised me was that there weren’t more “No (other reason/multiple)” responses. That was my response, since I would oppose the dragon-tyrant for all four of the stated reasons, even though some reasons are stronger than others.

Is there a fact of the matter as to whether the Continuum Hypothesis is true or not?

This is another one that was inspired by the PhilPapers survey. For those who didn’t know what it was, the Continuum Hypothesis is a question in set theory asking what the cardinality of the real numbers is. Cardinality refers to the number of elements in a set (so, e.g., all natural numbers are cardinals), but the cardinality of infinite sets can also be defined. Two sets A and B have the same cardinality if there is a bijection between them: a function that sends every element of A to an element of B, such that no two elements of A are mapped to the same element of B, and every element of B has some element of A sent to it. This is just a formal way of saying that the elements of the two sets can be put in a one-to-one correspondence with each other, which is the obvious way to generalize the notion of “has the same number of elements as” to possibly infinite sets.

It’s a well-known result that not all infinite sets have the same cardinality - in fact, there are far more infinite cardinals than finite ones. The smallest infinite cardinality is called ℵ₀ (Aleph null), and it’s the cardinality of the natural numbers and of the integers. The next-smallest cardinal (and yes, it is provable that there is a next-smallest cardinal) is called ℵ₁ (Aleph one). The real numbers have a strictly larger cardinality than ℵ₀, and the Continuum Hypothesis says that their cardinality is exactly ℵ₁. In other words, it states that there are no sets that are intermediate in cardinality between the real numbers and the natural numbers.
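As an illustration of the claim that the natural numbers and the integers have the same cardinality, here is a small sketch of one standard bijection between them (the function names `nat_to_int` and `int_to_nat` are mine). Checking finitely many values obviously isn't a proof, but it shows the pairing pattern.

```python
def nat_to_int(n):
    """Map 0, 1, 2, 3, 4, ... to 0, -1, 1, -2, 2, ..."""
    if n % 2 == 0:
        return n // 2
    return -((n + 1) // 2)


def int_to_nat(z):
    """Inverse of nat_to_int: non-negative integers go to even naturals,
    negative integers go to odd naturals."""
    if z >= 0:
        return 2 * z
    return -2 * z - 1


first_values = [nat_to_int(n) for n in range(7)]
print(first_values)

# Sanity check on a finite range: the two maps undo each other,
# so no two naturals share an integer and no integer is skipped.
assert all(int_to_nat(nat_to_int(n)) == n for n in range(1000))
assert all(nat_to_int(int_to_nat(z)) == z for z in range(-500, 500))
```

Every natural number is paired with exactly one integer and vice versa, which is all that “same cardinality” requires.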

If you are unfamiliar with the Continuum Hypothesis, you might wonder why there’s a philosophical debate over it. Isn’t it just a mathematical question that can be decided by mathematical proof? As it turns out, no. It can actually be proven that the Continuum Hypothesis is independent of ZFC, the standard system of axioms used as the foundation for mathematics. This means that it can neither be proven nor disproven in ZFC, and that leads to a philosophical question of whether it really has a truth value at all. One could argue that it does, and ZFC just isn’t a strong enough system to tell us what the truth value is. On the other hand, one could argue that the definition of a “set” is vague, and if our axioms for describing sets don’t give an answer to the problem, then it means that there is no definitive answer because it depends on what precise notion of a “set” you use. And then if you want to get really radical, you can claim that all of math is just a useful fiction anyway, and that “true” in math just means “provable in the axiomatic system we’re using,” so that CH is neither true nor false.

So what did Manifolders think of the question?

The majority did not actually know what the Continuum Hypothesis is, or at least, they weren’t sure whether it had a well-defined truth value. Out of those who did have an opinion, there was an exact tie: 27 people said there is a fact of the matter, and 27 said there isn’t. Out of those who said there is a fact of the matter, 8 said it was true, 1 said that it’s false, and 18 weren’t sure which. I’m surprised that there were so many more people who said it was true than that it’s false, since there doesn’t seem to be a clear consensus among mathematicians that one answer is more likely (unlike, for example, P vs. NP, where almost everyone agrees that probably P ≠ NP, even though no one has found a proof), though we’re dealing with such a small sample size that it could just be a coincidence.

The market on this one was technically wrong in terms of the absolute prediction, but I actually think it did a very good job predicting the result. It closed at 52%, which seems like a very appropriate amount given that the actual opinions were perfectly evenly divided. The only reason it was “technically wrong” is because I asked whether a majority of respondents with an opinion would choose Yes, and exactly 50% isn’t a majority.

For comparison, here’s how philosophers answered this question:

As we can see, the majority of philosophers with an opinion thought the Continuum Hypothesis does have a determinate truth value, but it was by no means a strong consensus. This is a question that’s unfamiliar even to many philosophers, as you can see from the record of the sample size at the bottom, and from the number who know about the question but are still undecided.

Ontology of Time

The next question was about ontology of time. As it was described on the survey, “Ontology of time describes your views on what exists, in the most unrestricted and absolute sense of the word.” There are three fairly common views of ontology of time, which were all options on the survey:

Eternalism: Past, present, and future all exist.

Presentism: Only the present is real. The past and future do not exist.

Growing block: The past and present both exist, but not the future.

I also included “Other” and “Not sure” options. How did Manifolders answer?

Eternalism was by far the favorite, probably because it’s the correct option. Growing block was slightly favored over presentism, but there were more people who aren’t sure than there were who chose either of those options. In total, there were 58 eternalists, 28 growing blockers, 22 presentists, 10 who have some other view, and 30 who aren’t sure. If only the people who are sure are counted, then this gives us 49% support for eternalism, 24% growing block, 19% presentism, and 8% other. In yet another accurate prediction from the market, the question of whether eternalism would get majority support among those with an opinion closed at 50%, a reasonable estimate given that the result was extremely close, with it coming just shy of a majority.

Manifolders’ opinions on this one are also very close to those of professional philosophers:

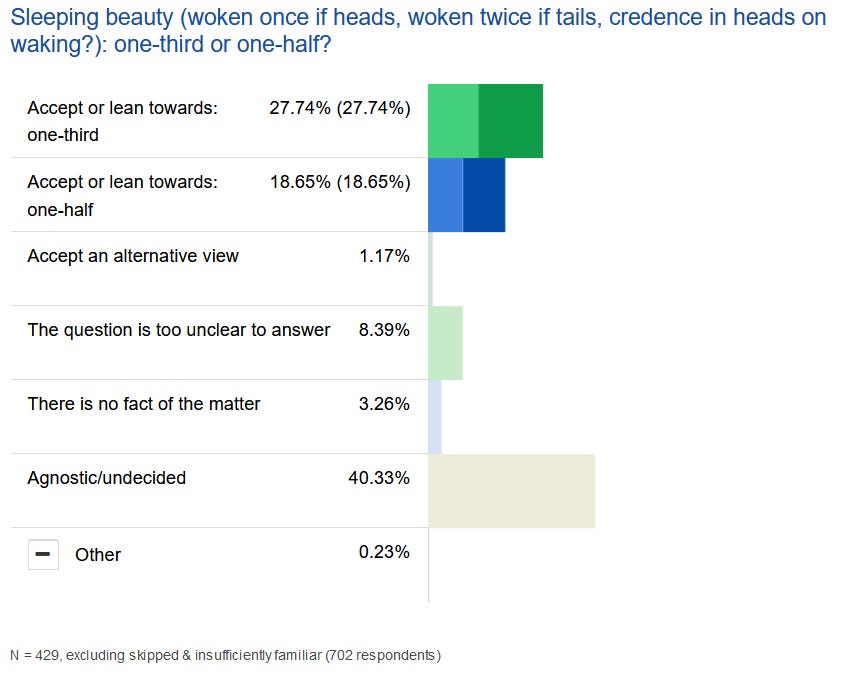

Sleeping Beauty Problem

The next question was the Sleeping Beauty Problem, a famous problem in anthropic reasoning. I explained it on my survey as follows:

Sleeping Beauty agrees to undergo an experiment and is told the following details: On Sunday, a fair coin will be flipped, but she won't be told the results. She will then be given a drug that puts her to sleep until Wednesday. If the coin lands heads, she will be woken up once on Monday and asked how likely it is (specifically, what her credence is) that the coin landed heads. She will then take the drug again, which also induces amnesia so that she forgets the interview, and sleep until Wednesday. If the coin lands tails, she will be woken twice, once on Monday and once on Tuesday, to be asked the same question, before taking the drug again each time. When she is interviewed, she won't be told what day it is, and because she took the drug, she won't remember any previous interview. Suppose Sleeping Beauty is an ideally rational agent and has just been woken up for an interview: What should her credence be that the coin landed heads, and what should her credence be that it is Tuesday?

The options were:

1/3 chance that the coin landed heads, 1/3 chance that it is Tuesday

1/2 chance that the coin landed heads, 1/4 chance that it is Tuesday

1/2 chance that the coin landed heads, 1/2 chance that it is Tuesday

1/4 chance that the coin landed heads, 1/2 chance that it is Tuesday

The problem is ambiguous, so there is no unique correct answer

Other

Not sure

The first two options correspond to the traditional thirder and halfer positions. The third option is meant to represent the double-halfer position, which claims that there’s a 50% chance of the coin having landed heads, both unconditionally and conditional on it being Monday, and generally would also endorse the idea that there’s an equal chance of it being Monday or Tuesday (this was a slightly imperfect way of representing the position, but I think pretty much all double-halfers would agree to the third option). Of course, this runs afoul of classical probability theory, since a simple calculation shows that it’s impossible for the probabilities to all be 1/2, but double-halfers claim that the normal rules of probability don’t apply here, usually based on the idea that the probability is context-sensitive in some unusual way that prevents direct comparison.
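Here is one way to spell out that calculation. The symbols are my own shorthand: H means “the coin landed heads” and Mon means “today is Monday”. The key fact is that a heads awakening can only happen on Monday, so H implies Mon.

```latex
\begin{align*}
P(H) &= P(H \wedge \mathrm{Mon})
      && \text{(since $H$ implies $\mathrm{Mon}$)} \\
     &= P(H \mid \mathrm{Mon}) \, P(\mathrm{Mon}) \\
     &= \tfrac{1}{2} \cdot \tfrac{1}{2} = \tfrac{1}{4}
      && \text{(plugging in the double-halfer values)}
\end{align*}
```

That gives P(H) = 1/4, which contradicts the assumption that P(H) = 1/2, so the three values can't all be 1/2 under the ordinary rules of conditional probability.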

The fourther position is one that I completely made up just to round out the answers (specifically because I wanted to have more than two answers in a multiple choice market I made on the probability of the coin having landed heads). I’ve never heard anyone endorse it, nor have I heard of any probability assignment rule that would lead to it.
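For comparison, the thirder numbers in the first option fall out of a simple counting rule: weight each awakening by how often it occurs over many repetitions of the experiment. The sketch below does that counting exactly. Treating awakenings as the thing to count is the thirder's assumption, and halfers reject it, so this shows where 1/3 comes from rather than proving it's right. The names `AWAKENINGS` and `awakening_frequencies` are mine.

```python
from fractions import Fraction

# Which days Sleeping Beauty is woken up on, for each coin outcome.
AWAKENINGS = {"heads": ["Monday"], "tails": ["Monday", "Tuesday"]}
COIN_PRIOR = Fraction(1, 2)  # fair coin


def awakening_frequencies():
    """Long-run fraction of awakenings that follow heads, and that fall on Tuesday."""
    # Expected number of awakenings per run of the experiment.
    total = sum(COIN_PRIOR * len(days) for days in AWAKENINGS.values())
    heads = COIN_PRIOR * len(AWAKENINGS["heads"]) / total
    tuesday = sum(
        COIN_PRIOR * days.count("Tuesday") for days in AWAKENINGS.values()
    ) / total
    return heads, tuesday


heads_share, tuesday_share = awakening_frequencies()
print(heads_share, tuesday_share)
```

Under this counting rule, a third of all awakenings follow a heads flip and a third of them happen on Tuesday, matching the first option on the survey.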

These were Manifold’s responses:

The most popular answer by far was 1/3, with 47 people choosing it. The halfer position had 18 responses, double-halfer had 7, fourther had 1, ambiguous had 19, and other had 12. There were also 37 people who weren’t sure. Excluding them, there were 45% thirders, 17% halfers, 7% double halfers, 1% fourthers, 18% ambiguous, and 12% other.

For comparison, here’s what philosophers think:

This was another one where Manifold was pretty close to professional philosophers. Both groups favor the thirder position over the halfer one, but also have a significant minority that think the question is unclear or has no fact of the matter. Interestingly, Manifolders were more likely to have an opinion on this than the philosophers on the PhilPapers survey, even though a lot of philosophers skipped the question because they weren’t familiar with it. Out of all the questions I looked at, this one had by far the largest percentage of undecided philosophers.

Ethics

The next question used checkboxes and asked, “Which meta-ethical views do you agree with?” (though technically speaking, the first three are actually normative ethical theories). The options were:

Consequentialism (Moral behavior is that with the best expected consequences. This can also include forms of rule utilitarianism where the consequences of following a rule, even when it has bad consequences in some situations, are better than deciding on a case-by-case basis.)

Deontology (Morality is based on following certain duties/rules that determine whether an action is right or wrong regardless of the consequences.)

Virtue ethics (Morality is based on behaving in a way that embodies certain virtues, not the consequences or intrinsic rightness/wrongness of actions.)

Relativism (There is no objective fact of the matter as to what is and isn't moral, or morality is based on one's personal or cultural standards.)

Objective morality (There is an objective fact of the matter as to whether certain actions are right or wrong.)

Moral absolutism (Some actions are wrong in all possible circumstances.)

Non-cognitivism (Statements about morality are not actually propositions with truth values, e.g., they are ways of expressing approval or disapproval, like cheering or booing.)

Moral nihilism (There is no such thing as morality.)

I hold to a different meta-ethical theory than the ones described here.

Not sure

Unsurprisingly, consequentialism was by far the most popular normative theory - this was predicted by the market on the subject. It was also the only view to get majority support. Interestingly, adding up the votes for all three normative theories gives us 144 people who selected one of them, out of 145 total. This makes it sound like almost everyone believes in one of these three options, but actually, there were a lot of people who selected more than one of them. I assume this probably represents that they have a view of normative ethics that shares similarities to multiple of the listed ones, or that they are unsure but deciding between the options selected.
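To show why adding up checkbox votes overstates the number of distinct people, here is a toy example. The five responses below are made up purely for illustration and are not the actual survey data.

```python
NORMATIVE = {"consequentialism", "deontology", "virtue ethics"}

# Hypothetical checkbox responses, one set of selections per respondent.
responses = [
    {"consequentialism"},
    {"consequentialism", "virtue ethics"},
    {"deontology", "virtue ethics"},
    {"relativism"},
    set(),
]

# Summing votes counts a respondent once per normative theory they ticked.
total_votes = sum(len(r & NORMATIVE) for r in responses)

# Counting respondents counts each person at most once.
distinct_respondents = sum(1 for r in responses if r & NORMATIVE)

print(total_votes, distinct_respondents)
```

In this toy data, five votes come from only three people, which is the same effect that makes the 144 figure misleading.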

A lot more Manifolders believed in relativism than objective morality, but neither option had majority support, meaning a lot of Manifolders are undecided on this issue (or perhaps some think that it’s too nuanced to fit in a binary objective-subjective distinction). In fact, there are more people who selected neither objective morality nor relativism than either option had individually.

Moral absolutism and moral nihilism were the least popular views. Surprisingly, some people selected moral nihilism while also selecting other statements about morality. I’m not sure how to interpret that.

How do Manifold’s views compare with philosophers’? Philosophers are extremely divided on which normative theory is correct, though most endorse one of the three I listed:

Compared to philosophers, Manifolders are much more supportive of consequentialism and much less supportive of deontology.

No question on the PhilPapers survey directly asked “Objective morality vs. Relativism”, but this one is more or less the same thing:

(Although according to this article, not all accounts of moral realism require morality to be objective as part of the definition, so it’s not a perfect stand-in). As we can see, philosophers support moral realism by a large margin, making them very different from Manifolders on this issue.

There also wasn’t a question specifically about absolutism, but there was one about non-cognitivism.

It looks like non-cognitivism is about as popular among Manifolders as philosophers.

I also didn’t find a question that mentioned moral nihilism by name, but I did find one that mentioned error theory, which had only about 5% support, so not too far off what Manifolders think.

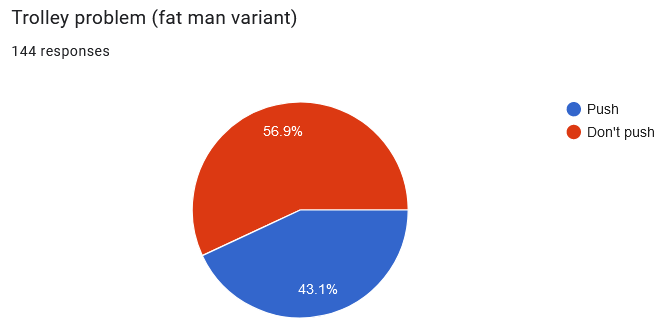

The Trolley Problem

Possibly the most well-known problem in all of philosophy, thanks to all the memes, is the trolley problem. There are already markets about the two most well-known variants on Manifold:

Unfortunately, these markets aren’t that good at gauging opinion on the questions because they use the dark and forbidden resolution technique: Resolves to probability at closing. So, I decided to use my survey as a more accurate method of judging opinion.

On the classic variant, I didn’t see any reason to explain the problem because, at this point, who doesn’t know it? So the description just said, “We all know it. Do you pull the lever?” Unsurprisingly, the vast majority said yes.

Philosophers weren’t that far off.

I actually found it surprising that only 63% of philosophers said we should switch the lever. I would say something like, “Never underestimate philosophers’ ability to disagree on anything,” but… I’ve seen the results of the PhilPapers survey. There really are some issues that philosophers are in near-unanimous agreement on. Oh well, I think we can all agree that this two-year-old has the real solution.

I described the “fat man variant” as follows: “You are on a bridge above the trolley tracks. The only other person on the bridge is a large man. You see the trolley on the tracks below, soon to hit five workers. However, the man with you on the bridge is big enough that his body would stop the trolley if you pushed him off the bridge in front of it, saving the five workers. Do you push him?”

I wasn’t sure what Manifolders would say about this one until I saw the results. A majority chose not to push the fat man, though it was fairly close.

This is kind of surprising given that a majority of Manifolders claim to be consequentialists, but it’s possible that some people answered “Don’t push” not because they believe it would be wrong to push, but because they don’t think they would actually be able to bring themselves to do it. It’s also possible that people had a consequentialist justification for not pushing the man (e.g., maybe pushing him would violate one of the rules of a rule consequentialist theory).

Philosophers also had majority support for not pushing. About the same proportion of philosophers answered “Don’t push”, though the proportion that answered “Push” was smaller than the proportion of Manifolders who answered the same (philosophers had the option of taking cop-out answers).

The discrepancy is probably explained by the higher popularity of consequentialism on Manifold.

The final variant I included was a self-sacrifice variant, stating, “Now you're the fat man, and there's no one else on the bridge to push you off. Do you jump in front of the trolley to save the five workers?” This wasn’t on the PhilPapers survey, nor is it a common variant I’ve heard elsewhere. I just wanted to find out how willing Manifolders are to sacrifice themselves, especially compared to the result of the previous question.

So, Manifolders are less willing to sacrifice themselves than the fat man. One could view this as proof that Manifolders are selfish. Alternatively, one could view it as proof that Manifolders are honest.

Side note: While looking for the two-year-old video, I found a different one that actually answers all three trolley problems: https://www.youtube.com/watch?v=Qfn9Jl6TIRA Clearly, this kid knows her philosophy.

The Fermi Paradox

The next question I asked was, “What is the solution to the Fermi paradox?” I have always found the Fermi Paradox strange because I don’t understand why it’s considered paradoxical (it’s only a paradox if you assume aliens are common, which we have no evidence for). But regardless, I like hearing the ideas people come up with. Here are all the responses:

"Aliens" are not life forms from other planets, they are already here

42

A very large distance and/or we are one of the first ones

AI

Advanced liffe is highly unlikely & we are the early ones

Aliens are just really far away, man

Aliens can't get here or don't care

Aliens don't exist in this universe

Aliens technologically developed enough to cross the distance between solar systems likely have sufficient technology to avoid detection, and no need to take the risks associated with direct, discernible interaction. Even if there are aliens, we should be expected to not realize it or observe them until or unless our technology eclipses -- or at least approximates -- theirs..

Civilizations tend to extinct themselves; Each one experiences being the "only" one because of anthropics

Confidence interval which encompasses no aliens (Sandburg et al)

Creation of life is hard, evolution of intelligence is hard, surviving is hard when you have nukes and bioweapons, the galaxy is big, and light is really slow.

Dark Forest

Difficult to evolve species are that are able to go to space

Distance

Don't know

Early grand filter (going from smart animal to early civilization)

Error bars on the calculation. Or Anthropic principle because aliens kill us.

Extreme difficulty of prebiogenesis

Filter e.g. abiogenesis

Grabby Aliens

Grabby Aliens

Grabby Aliens

Grabby Aliens

Grabby Aliens is the current best model. Obviously missing pieces, but the idea it talks about probably has some effect.

Grabby aliens

Grabby aliens

Grabby aluens

Great Filter

Great Filter.

Great filter is ahead of us (probably climate change or nuclear armageddon)

Great filter is ahead??

Hanson's Grabby Aliens model and/or we're (close to) alone

Have you ever played rugby?

Humans are early, *and* AGI doesn't result in high cap superintelligence, *and* cultures do not remain genocidal colonizers when they do achieve moderate superintelligence.

Humans arose about as early as possible in the universe.

I don't know

I don't know

I don't know, it's got a lot of fat assumptions going on, so I'd say too many breaking points to know now

I won't tell :)

I'm not sure! I haven't thought deeply about it

Intelligent life is extremely rare.

Intelligent life is far rarer than thought.

Intelligent life is rare

Intelligent life is rare and fragile

Intelligent life is rare because it is only by extreme luck that it evolves in any given planet.

Interstellar civilizations are possible and have arisen, but the origin points of their communications have been too far away to distinguish them from background noise.

Interstellar jouney are much more difficult than most think.

Interstellar travel is much worse than it seems + we are early + habited places are actually farther apart than suggested

It doesnt matter because we wouldnt exist to observe the fermi paradox if it was false. Any solution is equally valid.

It's not actually a paradox Monte Carlo simulation indicates that there's a 30% chance of us being the first in our bubble

It's too hard for aliens to build dyson spheres or expand beyond their solar system or other things that would be easy for us to detect.

Life is rare and Interplanetary colonization is really hard

Life is rare and fragile

Man, I thought it was just a thought experiment. No "solution". Need more evidence.

Most likely: Some version of the great barriers theory. Intelligent life arising is just incredibly unlikely. But if aliens do exist, I think the most likely possibility is a certain variant of Dark Forest that I believe in (too long to explain here).

No aliens

Not advanced enough technology.

Not hit great filter yet

Our planet likely does not contain forms of consciousness that are capable of interacting with other consciousnesses in this universe in a personally meaningful way

Rare Earth

Sapient evolved species that are intelligent enough to make itself known blows itself up first

Sapient technological alien life is absurdly rare, rarer by far than [single-celled/multicellular/presapient] life. They're out there, but we won't meet them for another billion years or so.

Single-celled life is common, but complex multicellular life is extremely rare

Space is big

Superlinear scaling leading to a singularity

THE ZOO HYPOTHESIS

The dark forest

The great filter is a combination of life being hard to arise plus technological self-annihilation.

The probability of extraterrestrial life interacting with us is rather low, and we haven't done much to actively search for evidence, especially outside of our own solar system

The universe is a simulation designed to create me. Nobody else matters, and aliens aren't worth the compute to simulate.

The universe is so vast in both space and time such that even if other intelligent life comparable to humanity exists (existed, will exist) it would be unlikely for us to find it.

The universe is too young

There are no intelligent aliens in the entire galaxy, advanced life is just too rare

There aren't any aliens. God made humans, unique in His image. The human zoo hypothesis is kinda silly

There is no paradox. Either life is extremely rare in the universe, or it's extremely rare for it to evolve into intelligent life. Since we have no reason whatsoever to expect intelligent life to be common, and a lot of reasons to expect it to be extremely rare, there is nothing to explain about this state of affairs.

There is other intelligent life in the Universe, but it is so unfathomably far away that we will never come into contact with it as a species without tremendous advancements in suspended animation and a plethora of other problems that need to be solved before we can even consider attempting to answer a cosmic booty call.

There used to be aliens, but one time I got really hungry. Sorry 😔

They are there and we don’t see them.

They cannot be bothered with us until we pass some stage

They're not out there. Complex life is unlikely enough that in our universe that it's only happened once so far.

Too hard to travel between stars

Top candidates are simulation hypothesis, AI doom in a way that doesn't send paperclippers outward, and rare Earth

Uncertain, if i had to guess, "aliens are hiding" seems most likely, or maybe a "grabby aliens" anthropic-type argument

Uncertainty is too large for firm conclusions

We are early

We are grabby aliens

We are one of the first civilization so, we don't see anything. Plus, the conditions of life are quite hard.

We didn't look very hard for life, and other life isn't trying very hard to meet us (seeing as we're primitive and have nothing to offer them)

We're [almost] alone; this is not a paradox.

We're blind.

We're early in the universe.

We're the first ones to the party

We're the grabby aliens

Who knows. Wasn't it supposed to be 'dissolved' or something?

civilisations are sparse across time and space

cosmic quarantine

dark forest theory

dunno

high distance, no reliable radio transmission / sensing pair that we've come across

huh? distance? time?

idk

idk bro

intelligent life is rare and advanced civilizations that do exist don’t emit lots of technosignatures

i’m not sure what that is

just decrease the temperature of the room

life is rare or "grabby aliens"

life is rare, earth is young

life is very unlikely to appear / great filter that we likely already passed

look harder idiots

something something lightcones

speed of light and theoretical technological limitations will prevent any alien species from making contact

there are aliens we just dont have the ability to detect them. they are interdimensional beings

you tell me

¯\_(ツ)_/¯

The most popular response was some variant of “Aliens are rare,” or even that they don’t exist at all. Some people gave more details on what, specifically, they think is rare: Is it life as a whole that’s rare, just intelligent life, or just advanced civilizations? People also mentioned the difficulty in detecting them due to large distances and equipment that isn’t powerful enough. This is related to the “They’re rare” theory, since exactly how rare aliens have to be to explain their absence depends on how close they would have to be for us to be able to detect them, and vice-versa.

Some suggested that, rather than distance in space, it’s distance in time that’s the problem: We’re the earliest ones.

A lot of people also said that, even if aliens are around, they might not want to contact us. Many specifically cited the dark forest theory as the reason. Others pointed out that we shouldn’t expect aliens to be similar to us. They may not be interested in exploration or meeting other intelligent species, and they might be so different from us that they can’t even meaningfully communicate or interact with us (as Wittgenstein said, “If a lion could speak, we would not understand it”). Some people also pointed out that aliens could easily keep themselves from being detected if they wanted to.

A lot of people mentioned the “grabby aliens” hypothesis. This is the idea that some alien species expand outward and prevent new civilizations from developing in the places they expand to. The idea here is an anthropic explanation: If there were any aliens close enough to us for us to see them, they’d have most likely taken over the Earth before human civilization could develop, preventing us from existing in the first place. So observing a universe without aliens is typical for young civilizations.

Many also mentioned the possibility that intelligent civilizations wipe themselves out as a great filter, with a few respondents citing AI as a possible method. There were also a lot of responses that just said “great filter” without elaborating on what the filter is. I’m pretty sure this is equivalent to saying “I don’t know.”

As a bonus, the responses that made me laugh were, “There used to be aliens, but one time I got really hungry. Sorry 😔,” “just decrease the temperature of the room,” and “look harder idiots.”

The Multiverse

The next question I asked was whether the Multiverse exists. On the one hand, the Many Worlds Interpretation is popular in rationalist circles (although it could be argued that that’s not really a multiverse). On the other hand, there’s no direct evidence for it. What did Manifolders think?

Almost half were completely agnostic, but overall, more people at least leaned towards a multiverse existing than not existing. The exact numbers are 8 for “Almost certainly not”, 14 for “It likely doesn’t exist”, 71 for “I’m completely agnostic,” 34 for “It likely exists,” and 22 for “Almost certainly”. If we treat those responses as a linear scale going from 0 to 100%, the average response is about 58%.
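For anyone who wants to check that average, here is a minimal sketch of the arithmetic. The counts are the ones reported above; the mapping of the five options to 0, 25, 50, 75, and 100% is my assumption about what "a linear scale" means here (evenly spaced steps), and the variable names are just illustrative.

```python
# Response counts as reported in the post, ordered from least to most belief.
counts = {
    "Almost certainly not": 8,
    "It likely doesn't exist": 14,
    "I'm completely agnostic": 71,
    "It likely exists": 34,
    "Almost certainly": 22,
}

# Assumption: the five options are evenly spaced on a 0-100% scale.
credences = [0, 25, 50, 75, 100]

total = sum(counts.values())
weighted_sum = sum(c * n for c, n in zip(credences, counts.values()))

average = weighted_sum / total
agnostic_share = 100 * counts["I'm completely agnostic"] / total

print(f"Total responses: {total}")
print(f"Average credence: {average:.1f}%")
print(f"Share completely agnostic: {agnostic_share:.1f}%")
```

Under that assumed mapping, the result rounds to the 58% quoted above, and the agnostic share comes out a little under half. A different spacing (say, 5% and 95% for the "almost certainly" options) would shift the average slightly.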

AI Alignment

The final question in this section asked for general opinions on the AI alignment problem. The description read, “E.g., How serious of a problem is it? How hard will it be to solve? Have we already solved it/do you think you have a solution? Is it an existential threat? Are misaligned AIs already causing major problems?” Here are the answers:

"AGI" is not so near. "AGI" will not become super-intelligent fast. Super-intelligent is not a superpower. If humanity survive other threats, at some point AI will be significantly superior to humans but that don't mean they will kill us all.

9/10 serious, could be hard to solve, 50% to eliminate us

90% everyone dies, 8 years median timeline with <10% probability it takes more than 20 years

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA (but no major problems from current tech)

AI existential concerns are overblown. We are not going to get some kind of superhuman intelligence that goes rogue anytime in the next 100 years. On a simpler level, of AIs simply not doing exactly what we want them to do, it is a problem but it's a more mundane one than most people think. In fact, we already face the alignment problem everywhere, in our institutions. We have elected and non-elected people (not just in the government, but all kinds of people who we give power over us) who's values don't exactly align with ours. In economics it's called the principal-agent problem. It certainly causes many issues, but it's not new and it's not going to cause societal collapse.

AI misalignment is the greatest x-risk and only significant s-risk we are currently facing. P(doom) ~= 15%

Alignment is only necessary to the extent that we rely on AI, E.G. if we somehow decided to outsource writing legislation to AI. I still hold the view that AI is far more likely to harm via incompetence (see previous sentence) than malicious competence. I think that more marginalized people in tech and more “social education” in tech would work wonders for alignment (and yeah, I concede that it’s also a technical problem, but still!)

An AI that gains sentience will probably be aligned by default - life created by humans will probably be human-like. Efforts to prevent AI from being racist etc. are actually helpful, because they help the AI develop some degree of empathy. There is no single solution. It is probably not an existential threat; humans misusing AI is more likely to kill us than a misaligned sentient AI is.

Attempting to solve it will ironically make the problem more severe

Broadly Yudkowskian, though with significantly less certainty.

Cant be solved.

Disaster is possible as always, higher risk than usual, credence low because the robustness arguments by doomers are weak

Discussion around this topic tends to be too vague to be interesting, and most expressed views, thoughts, or opinions are not very useful.

Either it is easy to solve or nearly impossible, any major effort over >1B dollars is likely inconsequential to the outcome.

Existential threat for humans as we know them. There is no solution, because our true goals cannot be formulated perfectly (they do not exist). The "alignment" task that we should work on is the same as: How do we "educate" our children to best follow our ethics? Fully controlling them is impossible. Children are also an existential threat for the goals of their parent generation.

Existential threat of 20% before 2040, 50% before 2100, assuming humans are not existentially impacted by things unrelated to AI development.

Extremely serious. Very hard, we're mostly doomed, but there's still some room for us to "get lucky" and have it be easier than thought. It's an unsolved existential threat. Misaligned AIs are causing minor problems at worst so far; things like facial recognition are having worse impacts. The fact that current attempts at "alignment" are often "make the model not do a PR disaster" and also make the models more boring and less-calibrated is not promising. Our current routes to survival seem like they might flow through an early-stage catastrophic industrial accident, and that's bad.

Feels completely out of my hands so I will not waste what life I have left worrying about it.

Hard problem, existential threat, present-day issues are overblown

Hard, big existential risk.

Has some merit, but we are quite far away from any meaningful implementation.

Humans don't even have alignment. What would align AI to? It is unsolvable. It is an existential threat just like nuclear weapons. Mutually assured destruction of at least 2 separate and superpower AI systems is the only chance we have. Like with nukes

Hyped for the matrix irl

I agree with Eliezer

I am surprised that we can align AI at all, and the fact that ChatGPT is aligned enough to be considered safe enough for children (or teachers at school) gives me hope its solvable enough for a basic AGI to not kill everyone. ASI not yet.

Bad actor with AGI is most likely end scenario.

I believe with intelligence will come kindness more often than not so not super worried.

I can't do anything about it so I try not to think about it

I certainly hope it would be solved.

I do not know enough to comment.

I don't think AI is as serious or immediate a threat as global warming, poverty, pandemics, or war.

I don't think a full "doom" of humanity is likely, though a massive, potentially strange/undesirable change in human society is likely.

So, I think it's good and most likely productive to work on AI alignment (not sure how efficient it is), though there seems to be less focus on s-risks than I would prefer.

I don't think we have solved AI alignment yet and there are problems we aren't aware of now.

I don't think misaligned AI is a major problem now.

It is a large problem in that it will change humanity majorly.

It will be hard to solve IMO.

I am looking forward to it.

It gives us meaning in this transitional period.

im so tired sry for the nonsense

I don’t think its very likely that an AI will end humanity (my p(doom)=2%) but it’s a large risk that people should be working to ameliorate. I’m not sure how difficult it will be align super intelligent AI but I think the solution will likely depend on the details of its implementation that are difficult to speculate about. Current AIs are not powerful enough that misalignment is a large problem, mostly current misaligned AIs cause embarrassment for the companies creating them. I do think that current AIs are powerful enough they can assist bad actors doing bad things.

I doubt it will get solved.

I literally converted to Christianity to oppose unaligned AI, so I would consider it the most important problem that we currently face.

I think a lot of people drastically underestimate how long we'll have to solve it, and drastically underestimate how bad it'll be and how hard it'll be to solve in full.

I think it is a very surmountable problem that we are simply not dedicating enough resources to solving.

I think it will be hard to solve but unsolved will not necessarily be as big a problem as some make out.

I think it's pretty worrying. I'm not convinced that the default AGI will kill us - I still kinda feel like having a neural net predict CEV should "just work" in some sense - but I'm not very confident at all. I think society should consider pauses on AI development in 5 years or something if we haven't solved alignment.

I think the strong form of the problem is quite tough to fully resolve, but I hold lower credence on the likelihood of bad outcomes and also assign different values to both the positive and negative cases such that my preference is for ripping. I think the AI Alignment problem is nerd catnip and that's the primary reason rats are obsessed with it.

I think we will see it coming.

I would be surprised if the AI didn't kill everybody by 2035.

I'm completely unconcerned with this problem. We can't create an operating system without vulnerabilities, so there's absolutely no hope of ever reigning in AI. Is it an existential threat? Being alive is an existential threat, kill yourself if you're sincerely worried about this.

If AI is smarter, it will choose the best option. Don't really see a problem with it

If AIs can become hypercapable quickly, we're doomed, because it will likely be superdestructive before it can be aligned. If AIs can become superintelligent but only slowly, then our best bet (but by no means a sure one) is other AIs working in their self-interest preventing a single one taking over. If there's a not-very-high bound on how intelligent an AI can get, then stakes and difficulty will be comparable to the existing task of aligning humans.

If the world gets destroyed by AI it will be my fault. Nobody else matters.

Impossible to solve, not actually that serious

Irrelevant problem

It is a very serious and difficult problem, and failing to solve it presents an existential threat to humanity.

It is already a hard problem and will be more harder as new models will keep coming. Whenever we have highly capable AI systems we have to reset everything and start again from zero or from the beginning i.e before GPT 3

It is an existential risk. No clue how a solution could look like. I hope we still have time because neural nets might not be the whole answer still.

It is overrated in my opinion but nonetheless important.

It is serious but not an existential threat. The solution is to solve specific problems as they come. Misaligned AI is not yet causing major problems.

It is serious, but nothing to worry about for a long while. Current AIs are great at talking but have no long term memory and no intrinsic motivations

It will kill us all

It's a problem and also not even well defined

It's a problem but i'm not sure it's as important as the ethics of AI use by humans

It's a serious problem, which will be difficult but solvable. Whatever the solution is, it must work even if all models are open source, since otherwise we'd just be a single datacenter leak from a potential extinction.

However, I'm much more concerned about the potential harms aligned AIs can end up causing, since I'm fairly confident we'll be able to solve alignment. Examples would include much more deadly wars (I'm not sure MAD works for AI-driven weapons used for conventional warfare), monopolization of most/all economic activity or even a new wave of imperialism, which could happen if only a few countries manage to develop AI and keep everyone else from using it.

It's a very serious problem, and I doubt we're close to solving it though it's hard to prove. It is by far the biggest existential threat we face. Once AIs get powerful enough to actually do dangerous stuff if they are misaligned, shit will get real. I think our best chance involves using AI a lot in some manner to help us with alignment.

It's evolution. I'm completely agnostic to the problems. What happens, happens.

It's serious, we're fucked.

Its a serious problem. terminator 2 judgement day get rdy

It’s an existential threat and very hard to solve. I expect we’ll ameliorate it with bandaids and cautious progress. Fingers crossed.

Misalaigned AI is an existential threat. I think it's less pressing than engineered bioweapons, but I'm not an expert on either subject.

Misaligned AI already exists and causes problems, just not existential ones. I think it's very unlikely that AI will wipe out humanity in the next century or lead to some similar existentially horrible scenario, but given what's at stake, it's still worth devoting some resources to ensure it doesn't happen. Plus, since the smaller-scale consequences of misaligned AI are likely to become more and more common, we ought to find ways to avoid it anyway. I have no idea what the solution to the alignment problem is, and I don't know if it's even possible to completely solve it.

Misalignment will pose a problem when trying to use LLMs to "replace human labor," as many humans will still be required to supervise and correct output. This problem is sufficiently well understood by the people who would be implementing those LLMs that it would take a *monumentally* stupid series of policy missteps to turn it into an existential threat. (United States military, please do not put AI models in your targeting computers.)

Mostly a problem for general AI which is very far away

Mostly irrelevant

Need a way to encode values; solution near at hand

Not an existential threat, but possibly still fairly serious.

Not an existential threat.

Not serious, but probably can't be solved. Aligning AI has the same

P(Doom) = ~3%

I don't believe in AI overlords as I don't believe AI will upgrade itself infinitely, it will reach a limit at some point and I think it'd be sooner rather than later. I find it unlikely that AI will make it it's objective to destroy humans and most of my doom position comes from AI skewed values killing humans as a collateral to some objective and humans using and training AI to cause destruction.

Right now it's humans that are causing problems where AI is a tool and we cannot expect a useful tool to be perfectly aligned. It's like asking a hammer to be unable to break a bone. I guess even if we never reach AGI, this tool could become the next nuclear bomb. It's too soon to know how difficult solving alignment is, as we are far from both understanding/aligning current systems and creating AGI. As a person working with AI, I don't expect alignment to ever be fully solved.

Seems easier to solve now then when I first thought about it, ten years ago. Seems potentially dangerous, although the rationalist community uh... sometimes takes actions that may be counterproductive.

Seems hard and definitely would be an existential threat if we had agentic AI but we don't and the simulator argument is unconvincing.

Seems very bad, try not to think about it too much, don't want to lose my friends because I become radicalized by x-risk worries. (until of course I lose my friends to x-risk itself, but I'll be blissfully unaware)

Serious, hard to solve

Serious, hard, no, yes, not really

Serious. Hard. No. Maybe. No.

Severity and difficulty both maybe 2-3 times that of something like current security

Somewhat; not that hard in the abstract but humanity will likely shoot itself in the foot; yes; probably not; no.

The orthogonality thesis is wrong and therefore much of the discussion in 'AI risk' circle is useless. There will be risks, conflicts, probably wars but it will be a lot more mixed and messy than singulitarians think and won't be "AI vs humanity".

Sounds like something for the assholes in charge to deal with

Sure seems like a non-negligible threat, but my intuition would be that it probably ends up being a solvable problem in practice.

TAI will exist: ~95%

Conditional on TAI: p-extinction: ~20%, p-blursed: ~60%

There's a low (but far from 0) chance that AI will kill us all, and thus it is an important problem. We don't seem close to solving it, and I have no idea how such a solution would even look like, which means I don't know how hard the problem is.

They are not currently causing problems; whether they cause problems will be almost entirely dependent on what systems we choose to automate; we will doubtless automate systems that "shouldn't be automated" (AI will make "the wrong decisions" with serious harm); however, recursively-improvement AI is not a problem, and we will not see "accidentally created agency".

Very Serious, very hard. It needs attention. We will likely solve it

Very bad

Very serious problem, hard to solve, existential threat, but present issues overstated.

Very serious problem, very hard to solve. I don't have a solution. Yes it's an existential that. Not causing major problems yet

Very serious problem, we don't have a solution, seems pretty hard to solve, but it's hard to tell from here. Is an existential threat. Currently not causing major problems, though causing some small problems.

Very serious problem. It'll be hard to solve but we'll manage it. It is an existential threat but a very low-probability one. Misaligned AIs are not yet causing major problems.

I'm most worried that AI will empower everybody, including crazy people. Like if it's easy to build an AI that will tell you how to engineer a bioweapon from easily obtainable ingredients, somebody is definitely going to unleash that. Also worried about authoritarian governments. But I don't see these as AI alignment issues directly.

Very serious, very hard to solve, existential threat.

Very serious.

Very hard, especially since AI companies are not particularly trying. Open ai has some stuff they are trying but they seem to view the problem as massively easier.

We have not solved it.

Yes, something smarter than us that also thinks faster is a threat if it has misaligned values.

In a way they are but only very loosely 'misaligned', because the current AIs are closer to mice than general intelligences.

Very serious. Hard to solve.

Very serious. IDK. Apparently we haven't. Yes. Not that I have seen.

We are doomed, we barely know how we'd even approach solving the obviously terrible problem.

We are likely fucked.

We could easily all die

We have no idea whether it's an existential threat, so from an expected value perspective, it is one. Unsure if existing ideas constitute the skeleton for a viable solution. Most people are nowhere near concerned enough but Yudkowsky and co are irrationally doomy. I think without serious alignment efforts we have roughly coin-flip odds of survival when superintelligence happens (which it will, sometime this century, probably between 2040 and 2070), but the possibility of alignment being efforts being both serious and fruitful bring extinction odds down to ~35%.

Early ChatGPT was misaligned in that the developers clearly intended certain behavoirs to be off-limits but it could be easily manipulated into them. This is still true to a lesser extent. I don't think this has caused real problems though - people using it to imitate real people online is a problem but since 'talk like a human about a topic' is intended functionality this is not misalignment, even if the creators consider it misuse.

We know so little that it's hard to answer

We won't solve it. We may or may not achieve AGI/ASI.

We're probably fucked and there's nothing I can realistically do about it other than convincing my friends to hate the antichrist, so that's what I do

We’re safe for now but we’re going too fast

Why is a kitten better at aligning humans than a piglet? What better choices could the piglet have made?

X-risk/x-near-certainty.

You concern yourself

alignment is impossible by definition, but AI is not a serious threat

bad problem, will be solved with social controls

big deal

dunno

humanity's failure or success will not be based on easily predictable issues

i think it’s a serious problem but not an existential threat and that a lot of very smart and talented people are working on solution. i don’t think it’ll wipe us out but it will be hard to fix

it is fundamentally unsolvable. we will all die sooner or later.

maybe impossible, but definitely beyond our means

p(doom) around 0.15 ish with large uncertainty

I'm not worried about near-term GPTs, and I think scaling just happens to be about to get harder and slower, which should buy us time.

I expect it to be easy to get AIs 99% of the way to something we consider sufficiently aligned. It is easy to convey the broad idea of what humans consider catastrophical, such that it takes a combination intent and big tech resources to get into trouble within the next ~10 years at least. Which is unlikely, though not impossible.

I expect the last 1% of trouble to be solveable by counteracting the AI's better intelligence with sufficient preparation at the same time that we keep improving our alignment efforts.

The cleverest in the world cannot escape sufficient preparation (I expect this to be controversial, but I think preparation is vastly undervalued).

I think we will get multiple tries, and likely won't die on the first fuckup, because of preparation.

serious, hard to solve, will have a solution will take longer than we think,

size of problem is overstated in the rationalist community; it's only through practical experience with increasingly capable AI systems that progress on the control problem will be made (I believe theoretical approaches will be largely a dead-end due to the irreducible nature of information integration/intelligence)

very unlikely to ever cause an existential threat. I doubt an intelligence explosion ever happening. AI safety research is still useful. Need to focus significant amounts of recourses of short-term AI risks (unemployment, misinformation, etc.)

~80% chance of human extinction or S-risk within 50 years.

🤷‍♂️

As you can see, the opinions run the gamut from, “It’s not a problem at all,” to, “We’re all gonna die with ~100% certainty.” Most people do take the problem seriously, but there’s disagreement on how likely the worst-case scenarios are, and even on what they are (x-risk vs s-risk). Timelines on when AI will become powerful enough to be dangerous also varied, from some timelines so short that they seem insane to me, to ones predicting it will happen late into this century.

There were a lot of opinions on how difficult the problem is. Some think it’s completely unsolvable, but many think that, while it might be impossible to completely eliminate misaligned incentives from AI, it won’t be hard to get the problem close enough to completely solved that it doesn’t cause any huge issues. Others thought we are on track to solve it, or will at least be prepared to deal with the consequences if we don’t. One idea that stuck out to me was from one of the later comments, which mentioned that practical experience will be much more useful than theoretical approaches in solving the problem. This is an interesting point, since most of the attempts to solve the problem completely seem to be theoretical. Perhaps more practical experience as AI becomes a more common part of life will help solve it.

A lot of people also called into question the assumptions that AI doomerism is based on, like the idea of a recursively improving AGI smarter than humans almost immediately becoming too powerful for us to control, the idea that we would have no ability to contain a superintelligence despite preparing for it, the idea that we only have one chance at solving alignment, and the orthogonality thesis.

Most people didn’t think misaligned AI is currently causing major problems, though this might just be because the frame of reference they’re comparing it to is, “AI kills everyone.” I think there’s a good argument to be made that a lot of problems much bigger than, “This chatbot said things it wasn’t supposed to,” are caused by misaligned AI (e.g., predictive policing algorithms that aren’t programmed to factor in race but end up being racist anyway; social media algorithms that are only told to promote “engagement” but end up promoting whatever will make the most people angry), but no one really mentioned these in their responses.

In conclusion, “AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA.”

Stay tuned for next time, when I’ll release the responses to all remaining questions on the survey, including the reveal of what the “secret final section” is.